For v.4.0: This time I trained my model with many more images (1887 to be exact). It is a pruned model. It doesn't require vae but you can use it if you want. Clip skip was trained with 1, but it gives successful results with 2 as well. (I recommend using negative embeddings to avoid burning so you can get great results)

If you want to support my work, you can buy me a coffee. Thanks in advance to those who support.

For v.3.0: Let me describe what I did. I don't speak English, I hope I can explain. All my previous versions were merged models. In this model, I trained three different models with three different folders containing 188 photos, each image having 150 steps (epoch). The photos in the three folders were also different from each other. I couldn't decide which model was the most successful. All three gave very good results. So I finally combined these three models and published them as a single model. So it went through merging first, then training, then merging again. But the most time-consuming and tiring part was the training part. Since I did not have the opportunity to choose both options, I chose this option to emphasize that it has undergone training.

I am sharing the photo package I used for training with you. Those who want can create their own experience by downloading it: link

-*- Let me give you a tip... A model tends to whatever style it was last trained with. You can test it as follows: generate 10-15 times with default settings (20 steps, 7 scale and 512x512 resolution (for 1.5 models)) without writing any negative or positive prompts. (If you want to make the images look clearer, you can write negative prompts provided that you do not use negative embedding). Whichever type of results you got the most in this test, it means that the trend of the model is in that direction -*-

For v1.0: Like my previous anime model, this one consists of several models mixed with each other in certain proportions. I give the list of the mixture below, but I will not specify the mixing ratios. I would like to thank those who created these models.

Ideal for creating ultra-realistic images. Some words can force the model to run nsfw. When the resolution is increased with Hires fix, it creates magnificent visuals. You may have problems with faces in smaller size images. In such cases, you can use adetailer applications such as almostiler.

No need to use vae.

I recommend increasing the resolution by using hires fix instead of using restore faces.

It will continue to be developed.

描述:

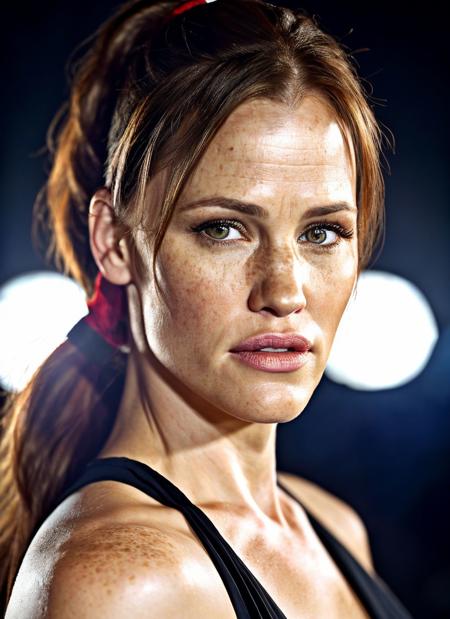

This is my first training attempt... I trained my model with more than 500 photos. The photographs I used were mostly portraits of beautiful women that I chose myself. I've seen it do quite well on models with red hair and freckles. I would appreciate if you share your experiences so that I can improve it further.

-place the yaml file next to the model file-

I am sharing the photo package I used for training with you. Those who want can create their own experience by downloading it: link

训练词语:

名称: beautyfoolReality_v30.safetensors

大小 (KB): 2082652

类型: Model

Pickle 扫描结果: Success

Pickle 扫描信息: No Pickle imports

病毒扫描结果: Success

名称: beautyfoolReality_v30.yaml

大小 (KB): 2

类型: Config

Pickle 扫描结果: Success

Pickle 扫描信息: No Pickle imports

病毒扫描结果: Success