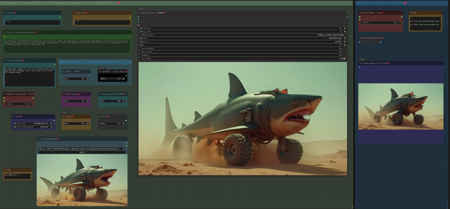

Workflow: Image -> Autocaption (Prompt) by Florence -> LTX Image to Video with STG

Creates clips of up to 10 seconds in less than 1 minute. Confirmed working on 8 GB of VRAM.

A TeaCache version has been added to the Experimental tab. It reduces processing time by about 40%, but its impact on quality still needs testing. (Available for the GGUF and GGUF+TiledVAE+ClearVram versions.)

Last update: Jan 20th 2025 (MaskedMotionBlur workflow updated with TeaCache)

V6.0: GGUF/TiledVAE Version & Masked Motion Blur Version

Updated the workflow with GGUF models, which save VRAM and run faster.

There is a Standard version, which uses just the GGUF models, and a GGUF+TiledVAE+ClearVram version, which reduces VRAM requirements even further. In a test with the larger GGUF model (Q8) at a resolution of 1024, 161 frames and 32 steps, the GGUF version peaked at 14 GB of VRAM, while the TiledVAE+ClearVram version peaked at 7 GB. Smaller GGUF models might reduce requirements further.

The GGUF model, VAE and text encoder can be downloaded here:

(Model & VAE): https://huggingface.co/calcuis/ltxv-gguf/tree/main

(Anti-checkerboard VAE): https://huggingface.co/spacepxl/ltx-video-0.9-vae-finetune/tree/main

(CLIP text encoder): https://huggingface.co/city96/t5-v1_1-xxl-encoder-gguf/tree/main

Go for the GGUF version if you have 16 GB of VRAM or more, and for the TiledVAE+ClearVram version if you have less than 16 GB.
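
As a rough illustration of that rule of thumb, a small helper could pick the variant from the available VRAM. The function name and the returned labels are made up for this sketch; only the 16 GB threshold comes from the notes above.

```python
def pick_workflow_variant(vram_gb: float) -> str:
    """Suggest a workflow variant for the given amount of VRAM.

    Hypothetical helper: only the 16 GB threshold is taken from the notes;
    the returned labels are illustrative, not file names inside the zip.
    """
    if vram_gb <= 0:
        raise ValueError("vram_gb must be positive")
    return "GGUF" if vram_gb >= 16 else "GGUF+TiledVAE+ClearVram"


print(pick_workflow_variant(8))
print(pick_workflow_variant(24))
```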

Masked Motion Blur Version: Since LTX is prone to motion blur, an extra group was added to the workflow that lets you set a mask on the input image and apply motion blur to the masked area to trigger specific motion. (It sounds better than it actually works, but it is useful in some cases.) GGUF and GGUF+TiledVAE+ClearVram versions are included.
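
To make the idea concrete, here is a minimal concept sketch of "blur only where the mask is set". It is not how the workflow group is built (that uses ComfyUI nodes); it assumes a grayscale image stored as nested lists and approximates motion blur with a plain horizontal box blur. The function name and the `length` parameter are illustrative.

```python
def masked_motion_blur(image, mask, length=5):
    """Horizontally blur only the pixels where `mask` is truthy.

    image:  list of rows of grayscale values
    mask:   same shape as image, truthy where blur should be applied
    length: number of pixels averaged along the x axis (illustrative)
    """
    if length < 1:
        raise ValueError("length must be >= 1")
    half = length // 2
    out = [row[:] for row in image]  # copy so unmasked pixels stay untouched
    for y, row in enumerate(image):
        width = len(row)
        for x in range(width):
            if not mask[y][x]:
                continue
            # Average a horizontal window, clamped at the image border.
            lo = max(0, x - half)
            hi = min(width, x + half + 1)
            window = row[lo:hi]
            out[y][x] = sum(window) / len(window)
    return out


image = [
    [0, 0, 255, 0, 0],
    [0, 0, 255, 0, 0],
]
mask = [
    [1, 1, 1, 1, 1],  # first row: blur everywhere
    [0, 0, 0, 0, 0],  # second row: leave untouched
]
blurred = masked_motion_blur(image, mask, length=3)
print(blurred)
```

The bright column is smeared sideways in the masked row only, which is the kind of local streak the mask is meant to create.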

V5.0: Support for new LTX Model 0.9.1.

- Included an additional workflow for low VRAM (clears VRAM before the VAE).
- Added a workflow to compare LTX Model 0.9.1 with LTX Model 0.9.

(V4 did not work with 0.9.1 when the model was released, hence V5 was created. This has changed since ComfyUI and the nodes were updated in the meantime: you can now use both models (0.9 and 0.9.1) with V4 as well as V5. The two versions use different custom nodes to manage the model but are otherwise the same. If you run into memory issues or long processing times, see the tips at the end.)

V4.0: Introducing Video/Clip Extension

Extend a clip based on the last frame of the previous clip. You can extend a clip about 2-3 times before quality starts to degrade; see the notes in the workflow for more details.
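
The workflow handles the extension inside ComfyUI. If you want to try the same idea by hand, one possible way to grab the last frame of a finished clip is ffmpeg. The sketch below only builds the command; the helper name and file names are hypothetical, and it assumes ffmpeg is installed.

```python
import shlex


def last_frame_command(clip_path: str, frame_path: str) -> list[str]:
    """Build an ffmpeg command that saves the final frame of a clip as an image."""
    return [
        "ffmpeg", "-y",
        "-sseof", "-1",   # start reading 1 second before the end of the file
        "-i", clip_path,
        "-update", "1",   # keep overwriting one image; the last write is the last frame
        "-q:v", "2",      # high JPEG quality
        frame_path,
    ]


cmd = last_frame_command("clip_01.mp4", "clip_01_last.jpg")
print(shlex.join(cmd))
```

The saved image can then be loaded as the input image for the next run.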

Added a feature to use your own prompt and bypass the Florence caption.

V3.0: Introducing STG (Spatiotemporal Skip Guidance for Enhanced Video Diffusion Sampling).

Includes a SIMPLE and an ENHANCED workflow. The Enhanced version has additional features to upscale the input image, which can help in some cases. The SIMPLE version is recommended.

- Replaced the height/width node with a "Dimension" node that drives the video size (default = 768; increasing it to 1024 improves resolution but might reduce motion, and uses more VRAM and time). Unlike previous versions, the image is not cropped.
- Included the new node "LTX Apply Perturbed Attention", which holds the STG settings (for details on values and limits, see the note within the workflow).
- The Enhanced version has an additional switch to upscale the input image (true) or not (false), plus a scale value (use 1 or 2) that defines the size of the image before it is injected, which can work a bit like supersampling. As noted, this is not required in most cases.
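
To give a feel for what a single "Dimension" value means for the output size, here is a small sketch. How the node computes sizes internally is not documented here, so two things are assumptions: that the longer side is matched to the dimension, and that both sides are snapped to a multiple of 32. The function name is made up.

```python
def fit_to_dimension(width: int, height: int, dimension: int = 768, multiple: int = 32):
    """Scale (width, height) so the longer side is about `dimension`.

    Assumptions for this sketch: the longer side is matched, the aspect
    ratio is kept (no cropping), and both sides are rounded to `multiple`.
    """
    if width <= 0 or height <= 0:
        raise ValueError("width and height must be positive")
    scale = dimension / max(width, height)

    def snap(value: float) -> int:
        # Round to the nearest multiple, but never below one multiple.
        return max(multiple, round(value / multiple) * multiple)

    return snap(width * scale), snap(height * scale)


print(fit_to_dimension(1536, 1024))        # default dimension of 768
print(fit_to_dimension(1536, 1024, 1024))  # same image at 1024
```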

Pro Tip: Besides using a CRF value of around 24 to drive movement, increase the frame rate in the yellow "Video Combine" node from 1 to 4+ to trigger further motion when the outcome is too static.

The "Modify LTX Model" node changes the model for the whole session. If you switch to another workflow, make sure to hit "Free model and node cache" in ComfyUI to avoid interference. If you bypass this node (Ctrl+B), you can do Text2Video.

V2.0: ComfyUI Workflow for Image-to-Video with Florence2 Autocaption

This updated workflow integrates Florence2 for autocaptioning, replacing BLIP from version 1.0, and includes improved controls for tailoring prompts towards video-specific outputs.

New Features in v2.0

- Florence2 Node Integration
- Caption Customization
  - A new text node allows replacing terms like "photo" or "image" in captions with "video" to align prompts more closely with video generation.
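
Outside of ComfyUI, the same caption replacement can be sketched in a few lines of Python. This is only an illustration of the idea, not the node's implementation; the function name is made up, and matching whole words case-insensitively is an assumption.

```python
import re


def videoify_caption(caption: str, replacement: str = "video") -> str:
    """Replace the whole words "photo" and "image" (any case) with `replacement`."""
    pattern = re.compile(r"\b(photo|image)\b", flags=re.IGNORECASE)
    return pattern.sub(replacement, caption)


caption = "The image shows a photo of a woman walking on a beach."
print(videoify_caption(caption))
```

Matching whole words only keeps terms such as "photograph" or "images" untouched; widen the pattern if you want those replaced as well.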

V1.0: Enhanced Motion with Compression

To mitigate "no-motion" artifacts in the LTX Video model:

- Pass input images through FFmpeg using H.264 compression with a CRF of 20–30.
- This step introduces subtle artifacts, helping the model latch onto the input as video-like content.
- CRF values can be adjusted in the yellow "Video Combine" node (lower-left GUI).
- Higher values (25–30) increase motion effects; lower values (~20) retain more visual fidelity.
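
In the workflow, the compression happens inside the "Video Combine" node. For anyone who wants to reproduce the step by hand, the sketch below builds a comparable ffmpeg command for a single still image. The helper name and file names are hypothetical, ffmpeg with libx264 is assumed to be installed, and the default of 24 is just the mid-range value mentioned in these notes.

```python
import shlex


def crf_compress_command(image_path: str, output_path: str, crf: int = 24) -> list[str]:
    """Build an ffmpeg command that encodes one image as a single H.264 frame."""
    if not 0 <= crf <= 51:
        raise ValueError("libx264 CRF must be between 0 and 51")
    return [
        "ffmpeg", "-y",
        "-loop", "1", "-i", image_path,  # treat the still image as a video source
        "-frames:v", "1",                # encode a single frame
        "-c:v", "libx264",               # H.264 encoder
        "-crf", str(crf),                # higher CRF = stronger compression artifacts
        "-pix_fmt", "yuv420p",           # needs even width and height
        output_path,
    ]


cmd = crf_compress_command("input.png", "compressed.mp4", crf=28)
print(shlex.join(cmd))
```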

Autocaption Enhancement

- Text nodes for Pre-Text and After-Text allow manual additions to captions.
- Use these to describe desired effects, such as camera movements.
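
Conceptually, the Pre-Text and After-Text nodes wrap the generated caption. A minimal sketch of that composition follows; the function name is made up, and joining the parts with a single space is an assumption about how the text ends up in the prompt.

```python
def build_prompt(caption: str, pre_text: str = "", after_text: str = "") -> str:
    """Join pre-text, caption and after-text, skipping any empty parts."""
    parts = [pre_text.strip(), caption.strip(), after_text.strip()]
    return " ".join(part for part in parts if part)


prompt = build_prompt(
    "A woman walks on a beach.",
    after_text="The camera slowly pans left.",
)
print(prompt)
```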

Adjustable Input Settings

- Width/Height & Scale: Define the image resolution for the sampler (e.g., 768×512). A scale factor of 2 enables supersampling for higher-quality outputs. Use a scale value of 1 or 2. (Changed to the "Dimension" node in V3.)

Pro Tips

- Motion Optimization: If outputs feel static, incrementally increase the CRF and frame rate values, or adjust the Pre-/After-Text nodes to emphasize motion-related prompts.
- Fine-Tuning Captions: Experiment with Florence2’s caption detail levels for nuanced video prompts.

If you run into memory issues (OOM or extremely long processing times), try the following:

- Use the LowVram version of V5.
- Use a GGUF version.
- Press "Free model and node cache" in ComfyUI.
- Set the starting arguments for ComfyUI to --lowvram --disable-smart-memory
  - To do so, open the file "run_nvidia_gpu.bat" in your ComfyUI folder and edit the line: python.exe -s ComfyUI\main.py --lowvram --disable-smart-memory
- Switch off hardware acceleration in your browser.

Credits go to Lightricks for their incredible model and nodes:

https://github.com/Lightricks/ComfyUI-LTXVideo

https://github.com/logtd/ComfyUI-LTXTricks

Description:

GGUF Version

MaskMotionBlur Version

Trigger words:

Name: ltxIMAGEToVIDEOWithSTG_v60.zip

Size (KB): 2014

Type: Archive

Pickle scan result: Success

Pickle scan message: No Pickle imports

Virus scan result: Success