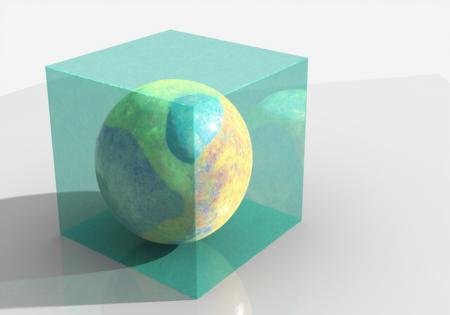

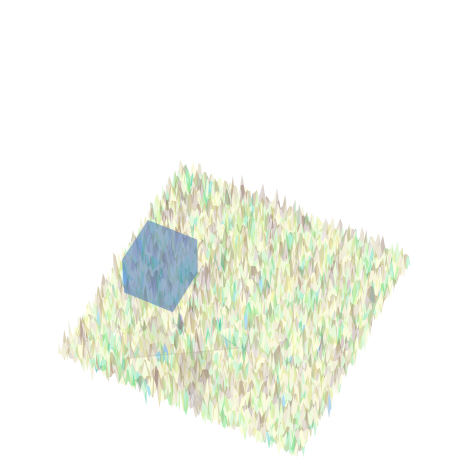

Trained on 3D sequences created with Python. Experimental image anchoring concept.

The information in this PDF is highly detailed and specific to neuroscience, particularly the study of circuits in the retrosplenial cortex (RSC) of mice and their roles in spatial cognition and memory. Here’s a breakdown of ideas from it that might be adapted to enhance a dataset for a LoRA aimed at improving the visual/spatial capabilities of image generation models:

1. Spatial and Structural Differentiation:

- The PDF highlights distinct circuits within the RSC that handle spatial information differently depending on the target regions they project to (such as the secondary motor cortex and the anterodorsal thalamus). In a LoRA dataset, you could simulate this idea with images that show different spatial arrangements and orientations of objects, mimicking distinct pathways or perspectives. For instance, varied depths, object sizes, and viewpoints could represent different "projection-specific" perspectives in 3D space.
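To make the depth/size/viewpoint idea concrete, here is a minimal sketch of a pinhole projection in plain Python. It is not the author's actual generator (which isn't published here); the `Camera` class, the `project` function, and the default focal length of 500 pixels are illustrative assumptions. It shows how the same 3D point lands at a different image position and scale when the camera moves or turns:

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class Camera:
    """Hypothetical pinhole camera at height 0, turned by `yaw` radians about the vertical axis."""
    x: float
    z: float
    yaw: float = 0.0       # 0 looks along +z; pi/2 looks along +x
    focal: float = 500.0   # focal length in pixels (assumed value)


def project(point, cam):
    """Project a world point (x, y, z), with y as height, to image-plane (u, v).

    (u, v) is measured from the image center with v growing upward.
    Returns None when the point is behind the camera.
    """
    px, py, pz = point
    # Move the world so the camera sits at the origin.
    dx, dz = px - cam.x, pz - cam.z
    # Rotate by -yaw so the camera's viewing direction becomes +z.
    cx = dx * math.cos(cam.yaw) - dz * math.sin(cam.yaw)
    cz = dx * math.sin(cam.yaw) + dz * math.cos(cam.yaw)
    if cz <= 0:
        return None
    # Perspective divide: projected offsets shrink as 1 / depth.
    return (cam.focal * cx / cz, cam.focal * py / cz)
```

Halving an object's distance to the camera doubles its projected offset and size, which is the depth cue that varied object sizes and viewpoints would expose the LoRA to.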

2. Environmental Contexts and Spatial Landmarks:

- The RSC is involved in tasks like object-location memory and place-action association, where the spatial relationship between an object and its environment is crucial. For your LoRA, including variations in environmental context (such as background gradients, floor patterns, or spatial grids) and objects positioned in relation to "landmarks" (central or offset points) could help the model develop a more nuanced understanding of spatial relationships.
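One way to pair landmark-relative placements with matching captions is sketched below, assuming positions are normalized image coordinates and captions are plain text. `LANDMARKS`, `relation_to_landmark`, and `sample_layout` are hypothetical names, and both the landmark coordinates and the 0.05 tolerance are arbitrary choices:

```python
import random

# Hypothetical landmark set: one central point and one offset point.
LANDMARKS = {"center": (0.5, 0.5), "offset": (0.3, 0.7)}


def relation_to_landmark(obj_xy, landmark_xy, tol=0.05):
    """Describe an object's position relative to a landmark.

    Coordinates are normalized: x grows to the right, y grows downward,
    both in [0, 1]. Offsets within `tol` count as aligned.
    """
    dx = obj_xy[0] - landmark_xy[0]
    dy = obj_xy[1] - landmark_xy[1]
    vertical = "above" if dy < -tol else "below" if dy > tol else ""
    horizontal = "to the left of" if dx < -tol else "to the right of" if dx > tol else ""
    if vertical and horizontal:
        return f"{vertical} and {horizontal} the landmark"
    if vertical or horizontal:
        return f"{vertical or horizontal} the landmark"
    return "at the landmark"


def sample_layout(rng, n_objects=3):
    """Pick a landmark, scatter objects around it, and caption each placement."""
    name, landmark = rng.choice(sorted(LANDMARKS.items()))
    layout = []
    for i in range(n_objects):
        xy = (round(rng.uniform(0.1, 0.9), 3), round(rng.uniform(0.1, 0.9), 3))
        caption = f"object_{i} is {relation_to_landmark(xy, landmark)}"
        layout.append({"landmark": name, "xy": xy, "caption": caption})
    return layout
```

Passing a seeded `random.Random` instance makes a layout reproducible, so the same arrangement can be re-rendered against a different background or floor pattern.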

3. Layered, Semi-independent Circuits:

- Just as RSC neurons form semi-independent circuits with distinct roles, a LoRA dataset could feature layers of information that interact without fully merging. For example, transparent overlays, wireframes, or shadow layers with different intensities or colors can mimic layered, semi-connected visual features and enhance depth and dimensionality.
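Layered overlays like these are usually combined with alpha compositing. Below is a minimal per-pixel sketch of the standard Porter-Duff "over" operator, assuming straight (non-premultiplied) RGBA values in [0, 1]. `over` and `flatten` are illustrative helper names; a real pipeline would apply the same arithmetic to whole image arrays:

```python
def over(top, bottom):
    """Porter-Duff "over": place one RGBA pixel (straight alpha, floats in [0, 1]) on another."""
    tr, tg, tb, ta = top
    br, bg, bb, ba = bottom
    out_a = ta + ba * (1.0 - ta)
    if out_a == 0.0:
        return (0.0, 0.0, 0.0, 0.0)  # both layers fully transparent

    def mix(tc, bc):
        # Weight each color by how much of it stays visible, then divide by the
        # output alpha to return to straight (non-premultiplied) form.
        return (tc * ta + bc * ba * (1.0 - ta)) / out_a

    return (mix(tr, br), mix(tg, bg), mix(tb, bb), out_a)


def flatten(layers):
    """Composite a bottom-to-top list of RGBA pixels (e.g. base, wireframe, shadow)."""
    result = (0.0, 0.0, 0.0, 0.0)
    for layer in layers:
        result = over(layer, result)
    return result
```

A black shadow layer at 25% opacity over a white base yields 0.75 gray, so varying each layer's alpha is a direct way to get shadow layers with different intensities.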

4. Sensory Input Variability:

- The PDF describes how different RSC circuits receive different types of sensory input (visual, auditory, or somatosensory). Translating this to a visual dataset might mean creating samples that spread texture and visual cues across sensory "modes": some with high texture detail (resembling somatosensory input), others with color gradients or atmospheric effects (resembling visual or auditory input processing).
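A rough sketch of the two ends of that range: a high-frequency checkerboard standing in for texture-heavy samples and a smooth gradient standing in for atmospheric ones, plus a crude detail score for checking how a dataset is balanced between them. The function names and the mean-neighbor-difference metric are assumptions for illustration, and images here are plain 2D lists of grayscale values from 0 to 255:

```python
def checkerboard(size, cell):
    """High-detail texture: alternating 255/0 squares, `cell` pixels wide."""
    return [[255 if (x // cell + y // cell) % 2 == 0 else 0 for x in range(size)]
            for y in range(size)]


def horizontal_gradient(size):
    """Low-detail ramp from 0 (left edge) to 255 (right edge); needs size >= 2."""
    if size < 2:
        raise ValueError("size must be at least 2")
    return [[round(255 * x / (size - 1)) for x in range(size)] for _ in range(size)]


def mean_neighbor_difference(img):
    """Crude detail score: mean absolute difference between horizontal neighbors."""
    diffs = [abs(row[x + 1] - row[x]) for row in img for x in range(len(row) - 1)]
    return sum(diffs) / len(diffs)
```

A score near 255 means maximal pixel-to-pixel change, while a smooth ramp scores roughly 255 divided by the image width, so the metric separates the two "modes" cleanly even though it ignores vertical detail.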

5. Object-Location Memory Representation:

- Including variations where objects change positions relative to a fixed background across sequential images could mirror the concept of memory and recognition of changes in spatial layout. These subtle shifts might train the model to detect and remember spatial relationships across images, improving its response to prompts involving positioning and continuity.
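A sketch of how such a sequence could be specified before rendering, assuming one background identifier reused across all frames and a constant per-frame step. `object_shift_sequence` and `displacement` are hypothetical names, and `"room.png"` in the usage below is a placeholder; rounding to four decimals only keeps the floating-point positions readable:

```python
def object_shift_sequence(background, start_xy, step_xy, n_frames):
    """Frame specs in which only one object's position changes.

    `background` is any identifier (for example a filename) reused unchanged
    in every frame; positions are normalized (x, y) image coordinates.
    """
    (x0, y0), (dx, dy) = start_xy, step_xy
    return [
        {
            "frame": i,
            "background": background,  # identical in every frame by construction
            "object_xy": (round(x0 + i * dx, 4), round(y0 + i * dy, 4)),
        }
        for i in range(n_frames)
    ]


def displacement(frames, i, j):
    """How far the object moved between frames i and j."""
    (xa, ya), (xb, yb) = frames[i]["object_xy"], frames[j]["object_xy"]
    return (round(xb - xa, 4), round(yb - ya, 4))


frames = object_shift_sequence("room.png", (0.2, 0.5), (0.1, 0.0), 4)
```

Rendering every spec with the same camera and lighting keeps the background fixed, so the only difference between consecutive training images is the object's position.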

6. Complex Object and Shadow Interactions:

- The study used tasks that involved moving objects to different locations to test memory and recognition. For your dataset, experimenting with floating objects that cast realistic shadows could simulate depth perception and occlusion. Shadows could change position or sharpness to indicate object movement or shifting light sources, thus enhancing spatial interpretation skills in generated images.
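The geometry behind both shadow cues fits in a few lines. This sketch assumes a flat ground plane at height 0: `planar_shadow` projects a point along the ray from a point light, and `penumbra_width` uses similar triangles to estimate how blurred the shadow edge is for an area light parallel to the ground. Both names are hypothetical; a 3D renderer computes this itself, so treat the code as a way to reason about which parameters to vary:

```python
def planar_shadow(light, point):
    """Project `point` onto the ground plane y = 0 along the ray from a point light.

    Both arguments are (x, y, z) tuples with y as height.
    """
    lx, ly, lz = light
    px, py, pz = point
    if ly <= py:
        raise ValueError("light must be above the occluding point")
    # Ray parameter where light + t * (point - light) reaches y = 0.
    t = ly / (ly - py)
    return (lx + t * (px - lx), 0.0, lz + t * (pz - lz))


def penumbra_width(light_size, light_height, object_height):
    """Similar-triangles estimate of shadow-edge blur on the ground for an area
    light of width `light_size`, parallel to the ground at `light_height`."""
    if light_height <= object_height:
        raise ValueError("light must be above the object")
    return light_size * object_height / (light_height - object_height)
```

An object resting on the ground casts a perfectly sharp contact shadow (width 0), and the edge softens as the object floats higher, while moving the light sideways slides the shadow across the floor. Those two knobs map directly onto the changes in shadow position and sharpness described above.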

These principles could guide the design of a structured dataset that feeds visual-spatial information to the LoRA, potentially enhancing its ability to understand and generate images with spatial depth, orientation, and complex layering.

---

So that is roughly what I tried to do.

Sample image from my dataset:

Name: 1065777_training_data.zip

Size (KB): 2474

Type: Training Data

Pickle scan result: Success

Pickle scan info: No Pickle imports

Virus scan result: Success

Name: Image_Positioner_3d_Sequences.safetensors

Size (KB): 18854

Type: Model

Pickle scan result: Success

Pickle scan info: No Pickle imports

Virus scan result: Success