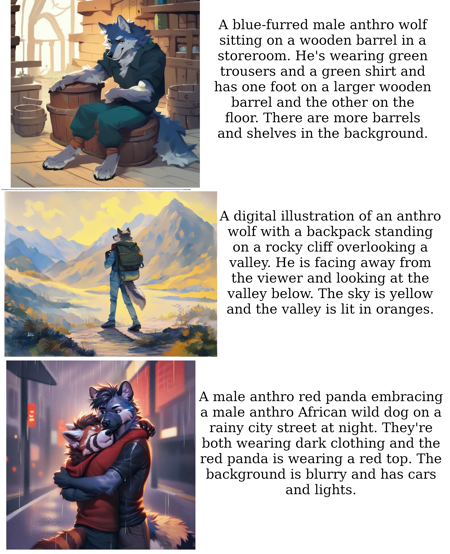

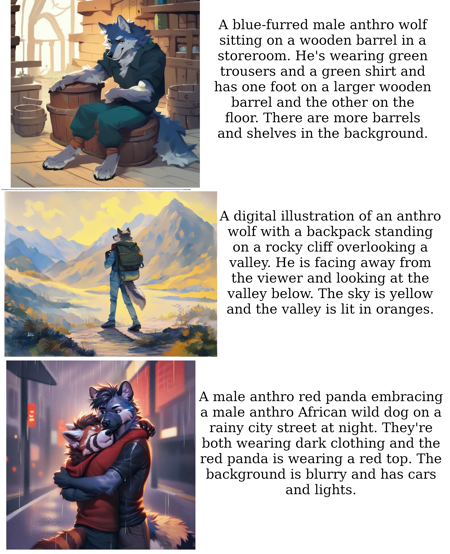

This is an extremely experimental image captioning model, based on moondream, for furry images (including NSFW ones). It can also handle humans and similar subjects. Please note: this is not a text-to-image model. It takes existing images and creates text descriptions of them. It's also unreliable and tends to hallucinate NSFW details that aren't actually in the image. Also, please read the warning further down if you are upgrading or using older versions.

At the time of release I wasn't aware of any publicly available captioning models for furry content, so I released it in its current state, even though it will likely require substantial fine-tuning and the generated descriptions will likely need manual editing. Since then, an alpha version of JoyCaption has been released, which may give better results depending on your needs. However, I'm still putting out the occasional new release of this model whenever I manage to come up with improvements. Note that while newer releases should be better overall, they do unfortunately seem to regress in some cases.

To use this model, you'll need to install moondream and unpack this model into that directory (I recommend setting up a venv or conda environment before doing this, because it installs very specific PyTorch and transformers versions and may not work correctly with the latest ones):

```
git clone https://github.com/vikhyat/moondream
cd moondream
git checkout 281074b9e488d142fba86760c7b606a1866acf3f
pip install -r requirements.txt
unzip yiffydreamImage_20241231.zip
```

Now you can point the included batch captioning script at a directory of images, and it will add a .caption file for every image that doesn't already have one:

```
python3 batchcaption.py myimagedirectory/
```

Important warning: there's a stupid bug in versions of the captioning script from before 20240826 that makes them ignore the prompt completely and run without one (`return prompt` was missing at the end of the definition of `_prompt_with_tags`. Whoops.). This doesn't make a huge difference if you just want to generate a batch of captions with those releases and aren't doing anything fancy (in fact, all my testing was done this way), which is why I missed it for so long. However, the older script may not work properly with newer releases, so I'd recommend using the fixed version in general. Sorry about that.
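Why one missing line makes the prompt disappear: in Python, a function that reaches its end without a `return` statement returns `None`. The sketch below is a hypothetical stand-in, not the real `_prompt_with_tags`; the function names and prompt wording are made up for illustration. Presumably the old script then passed that `None` along, so the model ran with no prompt at all, which matches the behaviour described above.

```python
# Hypothetical illustration only: NOT the real body of _prompt_with_tags from
# batchcaption.py, just a minimal function with the same kind of bug.

def prompt_with_tags_buggy(tags):
    prompt = "Describe this image."  # made-up prompt text
    if tags:
        prompt += " Tags: " + ", ".join(tags)
    # Missing "return prompt": Python implicitly returns None here,
    # so the caller never receives the prompt that was built.


def prompt_with_tags_fixed(tags):
    prompt = "Describe this image."  # made-up prompt text
    if tags:
        prompt += " Tags: " + ", ".join(tags)
    return prompt  # the kind of line the pre-20240826 script was missing


print(prompt_with_tags_buggy(["1girl", "bikini"]))  # prints None
print(prompt_with_tags_fixed(["1girl", "bikini"]))  # prints the full prompt
```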

In the 20241231 release, you can also enable the very experimental `--usetags` option, which tries to use human-written tags to guide the captioning. It looks for a matching .tags file for every image, containing comma-separated tags (so 123.jpg should have a 123.tags file that could contain, for example, `1girl, bikini, blonde hair`). If an image has no tags file, its caption is generated the usual way. Be sure to use an up-to-date version of batchcaption.py if you enable this feature.
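To make the file-matching rules concrete, here is a minimal Python sketch of how a batch captioner could decide what to process: skip any image that already has a .caption file, and load comma-separated tags from a matching .tags file when one exists, returning `None` otherwise so the image gets captioned the usual way. This is an assumption for illustration, not the code in batchcaption.py; the names `IMAGE_EXTS`, `read_tags` and `pending_images` and the set of accepted extensions are hypothetical.

```python
from pathlib import Path

# Hypothetical set of image extensions; the real script may accept others.
IMAGE_EXTS = {".jpg", ".jpeg", ".png", ".webp"}


def read_tags(image_path: Path):
    """Return tags from the matching .tags file, or None if there isn't one."""
    tags_path = image_path.with_suffix(".tags")  # 123.jpg -> 123.tags
    if not tags_path.exists():
        return None  # no tags: caption this image the usual way
    text = tags_path.read_text(encoding="utf-8")
    # Comma-separated, e.g. "1girl, bikini, blonde hair"; drop empty entries.
    return [t.strip() for t in text.split(",") if t.strip()]


def pending_images(directory: Path):
    """Yield (image_path, tags_or_None) for images without a .caption file."""
    for image_path in sorted(directory.iterdir()):
        if image_path.suffix.lower() not in IMAGE_EXTS:
            continue  # not an image (e.g. a .tags or .caption file)
        if image_path.with_suffix(".caption").exists():
            continue  # already captioned, so leave it alone
        yield image_path, read_tags(image_path)
```

For example, iterating over `pending_images(Path("myimagedirectory"))` would yield 123.jpg together with `["1girl", "bikini", "blonde hair"]` if it has that 123.tags file, and an untagged, uncaptioned image together with `None`.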

The generated captions are likely to vary a lot in quality, and interactions between multiple characters are particularly poor. If it's more useful to you, you can also write them to a JSONL file instead with the `--outfile` argument. I've also included a quick fine-tuning script.

Description:

Trigger words:

Name: yiffydreamImage_20241231.zip

Size (KB): 3278486

Type: Archive

Pickle scan result: Success

Pickle scan info: No Pickle imports

Virus scan result: Success