MOCHI | Test bench with fast

This workflow is focused on getting videos as fast as possible out of Mochi.

I'm not a developer, programmer, problem solver, or Python expert. I'm simply sharing findings that I believe MUST be shared, for free, here. So please don't complain to me if something doesn't work FOR YOU. I'll do my best to help, but chances are that 99% of the time I'll be using Google to find solutions to your problems. You can do that too.

V4:

-

Updated workflow with new nodes. V1, V2, and V3 are now obsolete. I'll keep the old versions here only because Civit is clumsy and keeps previews only on the old versions' tabs.

-

more refinements and controls

V3:

-

Added more control after I found it is possible to lower the minimum frames to 7 (which also requires lowering cfg and raising prompt strength).

It is now possible to get small, very low quality previews in less than 10 seconds! *

-

Found that VAE tiling is not necessary under 49 frames at medium settings;

this automatically solves the overlapping tiles issue.

-

Found the combination that works fastest, at least for me (3090), which is:

bf16 + clip t5xxl_fp8_e4m3fn_scaled

Thanks to Driftjohnson and his studies on this model. Check him out.

-

General workflow cleanup

* Please note that the UI now allows going down to 7 and 13 frames, as well as setting steps to a value below 20. These values are intentionally included only for quick prompting, to get a fast, rough idea of what the final result might look like once those values are increased.

Therefore, do not expect a coherent result with these values, but rather a rough approximation, which is still useful for finding the right prompt, composing the scene, and doing prompt research as quickly as possible.

Once the right prompt is found, both steps and frames should be increased!
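As a rough illustration of the frame counts mentioned above (7, 13, 49), here is a minimal Python sketch that snaps a requested frame count to the nearest value of the form 6k + 1 and applies the "no VAE tiling under 49 frames" finding. The 6k + 1 pattern is an assumption inferred from the values quoted in these notes, and the function names are hypothetical; none of this is part of the workflow itself.

```python
MIN_FRAMES = 7          # lowest frame count mentioned in these notes
TILING_THRESHOLD = 49   # tiling was found unnecessary below this (medium settings, 3090)


def snap_frames(requested: int) -> int:
    """Snap to the nearest frame count of the form 6k + 1 (assumed pattern).

    Ties round up, and the result is never below MIN_FRAMES.
    """
    k = max(1, (requested - 1 + 3) // 6)
    return 6 * k + 1


def needs_vae_tiling(frames: int) -> bool:
    """Heuristic from the notes above: tiling only matters at 49 frames or more."""
    return frames >= TILING_THRESHOLD


if __name__ == "__main__":
    for wanted in (5, 10, 13, 30, 49):
        frames = snap_frames(wanted)
        print(wanted, "->", frames, "tiling:", needs_vae_tiling(frames))
```

Treat the tiling threshold as a starting point only: it comes from tests on a single 3090 and may differ on other cards or at higher resolutions.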

V2:

-

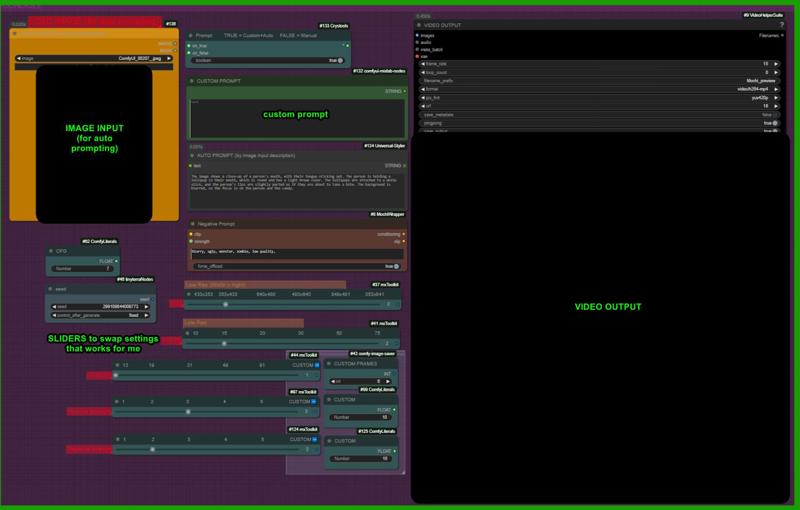

Added image load for auto prompting

-

better settings for faster tests

*You should also lock the Florence seed if you want to work on the same seed. I forgot to add that, sorry.

*I'm having some bugs with a few nodes that keep disconnecting and reconnecting in other places. This may also cause a height and width mismatch for you. I have no idea why, so please let me know if it happens.

________________________________________________________________________________________________

WHAT IS THIS ABOUT?

Various settings I’ve tested, organized in a handy UI for quick swap.

A set of sliders lets you swap between the low/high res settings I found useful.

A short video at the lowest acceptable quality can be created in 1 minute and 30 seconds (on a 3090), for testing and prompt exploration.

Of course, you are welcome to change any setting (steps, frames, size).

These are just the values I found worked best in my opinion, focusing on speed and value compatibility.

This model is still quite new.

The values marked in yellow with the 'Low Res' bar are the ones I recommend for QUICK TESTING AND PROMPT EXPLORATION. They seem optimal for generating a video as quickly as possible at the lowest quality. Below these values, MOCHI becomes practically unusable. I believe it’s essential to explore this model’s potential quickly. You can use the sliders above, set to the 'Low Resolution' option in yellow, to get a rough idea of the final output. Afterward, increase the resolution to higher values. This approach is necessary because the model is heavy and very slow, so testing its capabilities at low settings is highly useful.
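The two-pass idea described above (explore at low settings, then rerun the chosen prompt at higher ones) can be sketched in Python like this. All numbers are placeholders for illustration only, not the values marked in the workflow UI, and the `Job`, `preview`, and `promote` names are hypothetical.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class Job:
    prompt: str
    seed: int
    frames: int
    steps: int
    width: int
    height: int


# Placeholder values for illustration only; use the ones marked in the workflow UI.
LOW_RES = dict(frames=13, steps=15, width=320, height=192)
HIGH_RES = dict(frames=49, steps=40, width=848, height=480)


def preview(prompt: str, seed: int) -> Job:
    """Build a quick, rough test job for prompt exploration."""
    return Job(prompt=prompt, seed=seed, **LOW_RES)


def promote(job: Job) -> Job:
    """Keep the prompt and seed, swap in the higher-quality settings."""
    return replace(job, **HIGH_RES)


if __name__ == "__main__":
    draft = preview("a red fox running through snow", seed=42)
    final = promote(draft)
    print(draft)
    print(final)
```

Keeping the presets in two plain dictionaries mirrors the low/high slider groups: only the settings change between passes, while the prompt and seed carry over untouched.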

For the Installation I followed this guide

The workflow is a modified version of this: https://civitai.com/models/886896

Description:

Added image load for auto prompting

found better settings = faster

Trained words:

Name: fastMOCHITextToVideo_v20.zip

Size (KB): 8

Type: Archive

Pickle scan result: Success

Pickle scan message: No Pickle imports

Virus scan result: Success