This workflow generates a flux image with a consistent character at 1.15s/it on a 4060Ti (on 2nd generation onwards, if the compilation of the model is enabled). You will need 16GB VRAM for this workflow. It uses the fp8 flux models because the gguf modes do not work well with torch.compile. Please setup the environment as per the instructions below if you are getting errors.

-

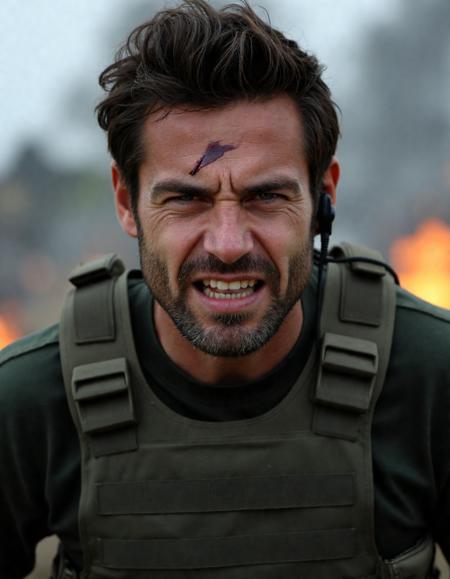

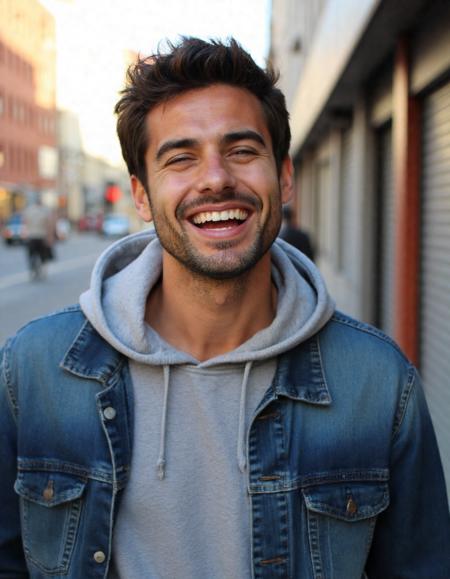

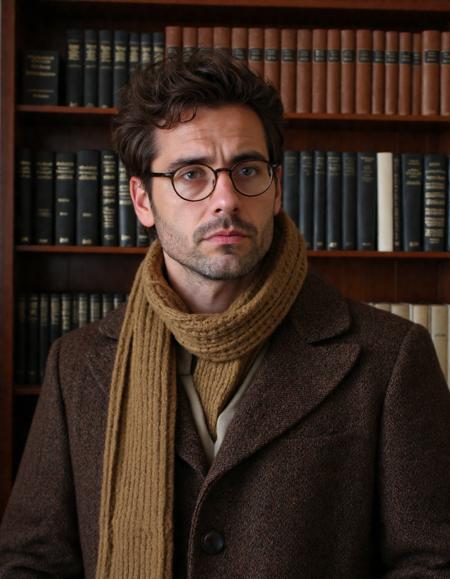

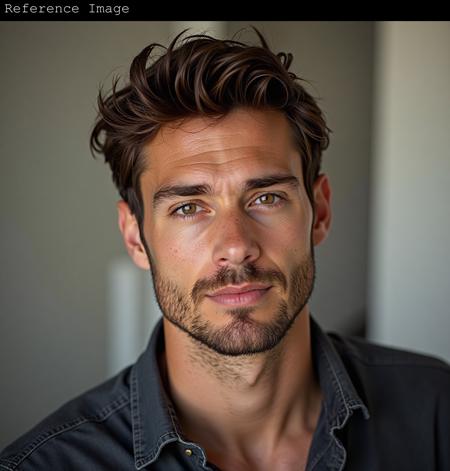

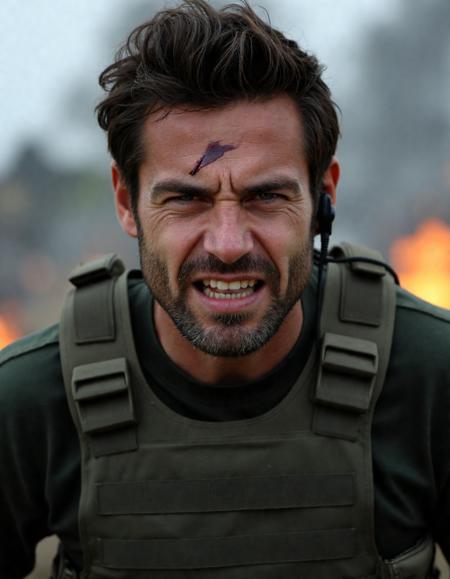

PuLID for character consistency. Please note that users have reported that PuLID works well on AI generated characters but do not work well on real photographs.

-

FLUX.1-dev-ControlNet-Union-Pro controlnet (need to use Q2_k flux gguf for controlnet on 16GB VRAM)

-

TorchCompileModel beta node, with Kijai's patch, used to compile the model, this will increase the time required for the first generation but speed up subsequent generations.

-

weight_dtype is selected to be fp8_e4m3fn_fast to take advantage of fp8 matrix multiplication for faster generation on Nvidia 40 series graphics card. If you are on an older card, select fp8_e4m3fn.

Environment

I have tested this workflow using the exact environment below, so if you are having troubles, try setting up the environment using the following steps:

-

Download and install Python 3.10.11 (https://www.python.org/downloads/)

-

Download and install Cuda 12.4 (https://developer.nvidia.com/cuda-12-4-0-download-archive) and cuDNN Version Tarball, CUDA Version 12 (https://developer.nvidia.com/cudnn-downloads). cuDNN is required for nodes like ReActor that use Onnx, this is why I have it installed anyway.

-

Clone the latest comfyui using: git clone https://github.com/comfyanonymous/ComfyUI.git

-

Change directory into the comfyui directory

-

python -m venv .venv

-

.venv\Scripts\activate.bat

-

pip3 install torch torchvision torchaudio --index-url https://download.pytorch.org/whl/cu124

-

install comfyui requirements: pip install -r requirements.txt

-

Download triton 3.1.0 for python 3.10 (triton-3.1.0-cp310-cp310-win_amd64.whl

) from here: https://github.com/woct0rdho/triton-windows/releases into your comfyui folder and install using pip install triton-3.1.0-cp310-cp310-win_amd64.whl

-

Run comfyui using: python.exe -s .\main.py --windows-standalone-build

Models

-

flux_dev_fp8_scaled_diffusion_model.safetensors (models/diffusion_models/flux): https://huggingface.co/comfyanonymous/flux_dev_scaled_fp8_test/tree/main

-

t5xxl_fp8_e4m3fn_scaled.safetensors (models/clip): https://huggingface.co/comfyanonymous/flux_text_encoders/tree/main

-

ViT-L-14-BEST-smooth-GmP-TE-only-HF-format.safetensors (models/clip): https://huggingface.co/zer0int/CLIP-GmP-ViT-L-14/tree/main

-

pulid_flux_v0.9.1.safetensors (models/pulid): https://huggingface.co/guozinan/PuLID/tree/main

-

antelopeV2 (models/insightface/models/antelopev2): download antelopev2.zip from https://huggingface.co/MonsterMMORPG/tools/tree/main and unzip. You should have all the antelopeV2 model files in the models/insightface/models/antelopev2 folder.

-

FLUX.1-dev-ControlNet-Union-Pro (models/controlnet): https://huggingface.co/Shakker-Labs/FLUX.1-dev-ControlNet-Union-Pro/tree/main download diffusion_pytorch_model.safetensors and rename to FLUX.1-dev-ControlNet-Union-Pro.safetensors

-

Flux VAE: install with manager

Custom Nodes

-

ComfyUI-GGUF: only for 16GB VRAM users who want to use controlnet

-

rgthree's ComfyUI Nodes

-

KJNodes for ComfyUI

-

ComfyUI-PuLID-Flux-Enhanced

描述:

Added Amateur Photo LoRA for more realism

Added Kijai's patch for LoRAs to work with torch.compile

训练词语:

名称: flux1DevConsistentCharacter_v30.zip

大小 (KB): 6

类型: Archive

Pickle 扫描结果: Success

Pickle 扫描信息: No Pickle imports

病毒扫描结果: Success