This repository provides an Inpainting ControlNet checkpoint for the FLUX.1-dev model, released by the AlimamaCreative Team.

News

Thanks to @comfyanonymous, ComfyUI now supports inference for the Alimama inpainting ControlNet. The workflow can be downloaded from here.

ComfyUI Usage Tips:

- Using the t5xxl-FP16 and flux1-dev-fp8 models for 28-step inference, GPU memory usage is 27 GB. Inference takes 27 seconds with cfg=3.5 and 15 seconds without CFG (cfg=1). Hyper-FLUX-lora can be used to accelerate inference.

- You can try adjusting (lowering) the control-strength, control-end-percent, and cfg parameters to achieve better results.

- An example configuration: control-strength = 0.9, control-end-percent = 1.0, cfg = 3.5.
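The tip about lowering control-strength and control-end-percent is easier to act on with a mental model of what the two knobs do. The sketch below is a simplified, hypothetical model, not ComfyUI's implementation: it assumes control-strength is a scalar multiplier on the ControlNet residuals and that control-end-percent switches the ControlNet off for the final fraction of the sampling steps. As far as we understand, ComfyUI maps these percentages onto the sampler's noise levels (sigmas) rather than onto step indices, so the real cut-off step may differ slightly. The function names `active_control_steps` and `scale_control_residuals` are illustrative only.

```python
from __future__ import annotations

# Simplified, hypothetical model of two ControlNet knobs (illustration only,
# not ComfyUI's actual code path).


def active_control_steps(num_steps: int, start_percent: float = 0.0,
                         end_percent: float = 1.0) -> list[int]:
    """Return the 0-based sampling steps on which the ControlNet is applied.

    Assumption: step i sits at progress i / num_steps, and the ControlNet is
    active while start_percent <= progress < end_percent. ComfyUI converts the
    percentages to noise levels instead, so its cut-off can differ slightly.
    """
    if not 0.0 <= start_percent <= end_percent <= 1.0:
        raise ValueError("expected 0 <= start_percent <= end_percent <= 1")
    return [i for i in range(num_steps)
            if start_percent <= i / num_steps < end_percent]


def scale_control_residuals(residuals: list[float],
                            strength: float) -> list[float]:
    """Scale ControlNet residuals by control-strength before they would be
    added to the base model's hidden states (strength 0 disables control)."""
    return [strength * r for r in residuals]


if __name__ == "__main__":
    # Example configuration from the tips: 28 steps, control-end-percent = 1.0.
    print(len(active_control_steps(28, end_percent=1.0)))  # 28
    # Lowering control-end-percent to 0.5 leaves the last 14 steps unconditioned.
    print(len(active_control_steps(28, end_percent=0.5)))  # 14
    # control-strength = 0.9 shrinks every residual by 10%.
    print(scale_control_residuals([1.0, -2.0], 0.9))  # [0.9, -1.8]
```

Under this model, lowering control-end-percent hands the later, detail-refining steps to the base FLUX model alone, and lowering control-strength weakens the ControlNet's pull on every step it is active.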

InputOutputPrompt The model was trained on 12M laion2B and internal source images at resolution 768x768. The inference performs best at this size, with other sizes yielding suboptimal results. The recommended controlnet_conditioning_scale is 0.9 - 0.95. Please note: This is only the alpha version during the training process. We will release an updated version when we feel ready. Compared with SDXL-Inpainting From left to right: Input image | Masked image | SDXL inpainting | Ours Step1: install diffusers Step2: clone repo from github Step3: modify the image_path, mask_path, prompt and run Our weights fall under the FLUX.1 [dev] Non-Commercial License. 名称: alimamaCreativeFLUX1Dev_v10.safetensors 大小 (KB): 4181449 类型: Model Pickle 扫描结果: Success Pickle 扫描信息: No Pickle imports 病毒扫描结果: Success

Model Cards

Model Cards

Showcase

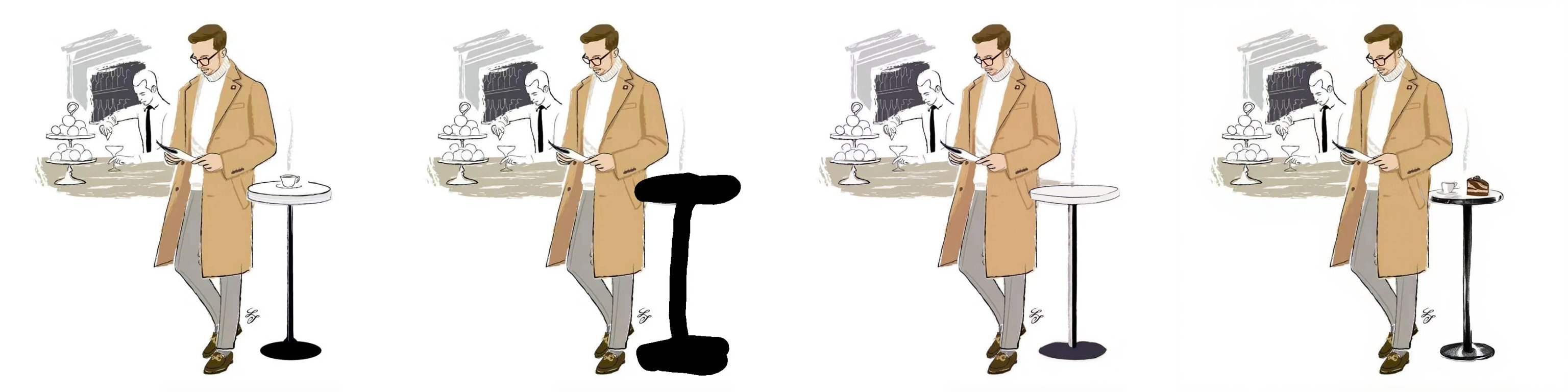

Comparison with SDXL-Inpainting

Comparison with SDXL-Inpainting

Using with Diffusers

Using with Diffusers

pip install diffusers==0.30.2

git clone https://github.com/alimama-creative/FLUX-Controlnet-Inpainting.git

python main.py

LICENSE
