This is a continuation of the research here https://civitai.com/articles/6792 using my own dataset and approach.

I'm working on an updated guide to Flux captioning, and this is part of the work towards it. The guide will cover more nuanced approaches to training.

But for now, for people who are interested, I'll briefly describe the direction I'm heading in and the kinds of results I'm finding.

First of all, there seems to be a misconception about which part of the dataset is most important. Many people claim that training without any captions, or on short triggers, is the most effective methodology, and that the only real way to improve on it is to stack more high-quality images.

But what I'm actually finding is that this approach itself leads to more overfitting, especially when used with repeats.

This idea started a few months ago, with SDXL, when WarAnakin spoke to me about the results of some of his research in the area. Through sheer trial and error across multiple attempts, he'd found that one of the leading causes of overfitting was not having images that are too similar. Rather, even a single image with the same or close enough captions to another image in the set, even when the images themselves are vastly different, had a much more direct and noticeable impact on results.
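To make that concrete, here is a minimal sketch of how you could scan a set for captions that are the same or nearly the same. It is not WarAnakin's method or tooling; the function name `find_similar_captions`, the use of Python's `difflib`, and the `0.9` threshold are all my own assumptions, since "close enough" has no fixed definition here.

```python
from difflib import SequenceMatcher
from itertools import combinations


def find_similar_captions(captions, threshold=0.9):
    """Return (name_a, name_b, ratio) for caption pairs at or above threshold.

    `captions` maps an image file name to its caption text.
    The 0.9 default is an arbitrary assumption, not a measured value.
    """
    hits = []
    for (name_a, cap_a), (name_b, cap_b) in combinations(sorted(captions.items()), 2):
        # Normalize case and whitespace so trivial differences don't hide a match.
        a = " ".join(cap_a.lower().split())
        b = " ".join(cap_b.lower().split())
        if not a and not b:
            continue  # deliberately uncaptioned images are skipped
        ratio = SequenceMatcher(None, a, b).ratio()
        if ratio >= threshold:
            hits.append((name_a, name_b, ratio))
    return hits


if __name__ == "__main__":
    example = {
        "a.png": "a wooly cat on a sofa",
        "b.png": "A wooly cat on a  sofa",
        "c.png": "felted mountain landscape at dusk",
    }
    for name_a, name_b, ratio in find_similar_captions(example):
        print(f"{name_a} <-> {name_b}: {ratio:.2f}")
```

Character-level similarity is a crude stand-in for "close enough"; comparing tag sets or embeddings might match what actually matters to the model more closely.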

Since then I've been experimenting with various approaches on SDXL and found that, despite SDXL being a BITCH to actually train compared to SD1.5, results were significantly improved and fit much better with the data already present in the models.

In simple terms, instead of using repeats in the classical sense, or caption dropout and caption shuffling in a totally randomized manner, the idea is to use tools like ChatGPT to expand the number of caption variations per image, then create copies of each image to pair with the new variant captions.

That means having a subset of image copies without any captions, a set with just trigger words, a set captioned with tags, and a set captioned with natural language descriptions.
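As a rough sketch of the copy-and-pair step, the snippet below duplicates each image once per caption variant and writes a same-named `.txt` file next to each copy. The function name `expand_dataset`, the `_v00` suffix scheme, and the assumption that the trainer reads captions from a sidecar `.txt` with the same stem are mine; check how your trainer expects captions, and how it treats an empty caption file, before relying on it.

```python
import shutil
from pathlib import Path


def expand_dataset(src_dir, out_dir, captions_by_image):
    """Copy each image once per caption variant and write a matching .txt file.

    `captions_by_image` maps an image file name to a list of caption strings.
    An empty string stands for the "no caption" variant.
    Returns the list of file stems that were written.
    """
    src_dir, out_dir = Path(src_dir), Path(out_dir)
    out_dir.mkdir(parents=True, exist_ok=True)
    written = []
    for image_name, variants in sorted(captions_by_image.items()):
        src = src_dir / image_name
        for i, caption in enumerate(variants):
            # Hypothetical naming scheme: original stem plus a variant index.
            stem = f"{src.stem}_v{i:02d}"
            shutil.copy2(src, out_dir / f"{stem}{src.suffix}")
            (out_dir / f"{stem}.txt").write_text(caption, encoding="utf-8")
            written.append(stem)
    return written
```

For one image, the variant list might look like `["", "wooly", "wooly, sheep, felted", "A felted wool sheep standing in a field."]`, covering the uncaptioned, trigger-only, tag, and natural language sets in that order.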

In this case, this particular experiment has taken that one step further.

What would happen if we restricted the dataset down to only 20 unique images and were to create ~30 different captions per image instead of 3?

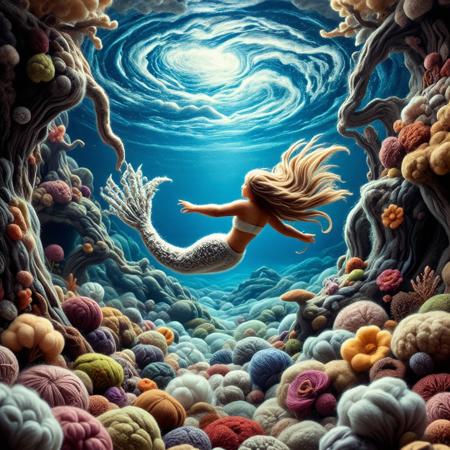

And then we find ourselves here. After 20 epochs, I tested which ones were the most prompt-adherent and maintained the style most closely, and found that a combination of Epochs 7 + 16 seemed to produce the best results.

However, there is a slight caveat here. While the results have been promising, the experiment is far from over AND I've been conducting it using Civitai's online trainer. Therefore, though I can train locally, to continue the research in a slightly more controlled manner I wanted to place this resource into early access for a small one-time Buzz exchange until a goal is met and the research is able to continue. I don't seem to have been given the option to do so.

The next steps would involve expanding the dataset to cover more subjects, perhaps going from 20 to 40 images in total, but this time covering more people, animals and objects.

Description:

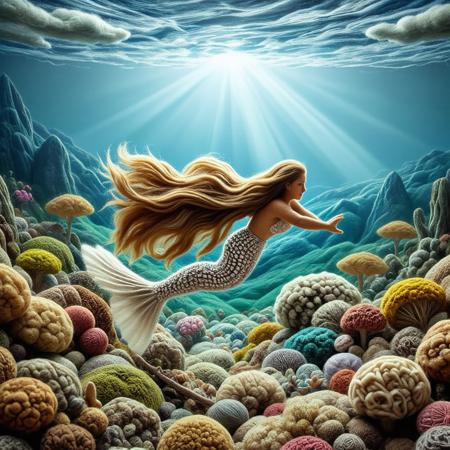

Trigger words: Woolen, wooly, felted

Name: 764830_training_data.zip

Size (KB): 97006

Type: Training Data

Pickle scan result: Success

Pickle scan message: No Pickle imports

Virus scan result: Success

Name: loras.zip

Size (KB): 117612

Type: Model

Pickle scan result: Success

Pickle scan message: No Pickle imports

Virus scan result: Success

Name: WoolyFluxTry3-000007.safetensors

Size (KB): 75143

Type: Model

Pickle scan result: Success

Pickle scan message: No Pickle imports

Virus scan result: Success