This model and the images it generates are for learning purposes only.

I did nothing; I'm just a porter.

This model is more of a character pack; the style it brings is a side effect.

It took more than 30 hours of repeated attempts, during which I almost gave up, but in the end I achieved a more balanced result. Most importantly, my training hypothesis was verified. In the future, I might write these experiences up as an article.

However, bad hands are still a problem.

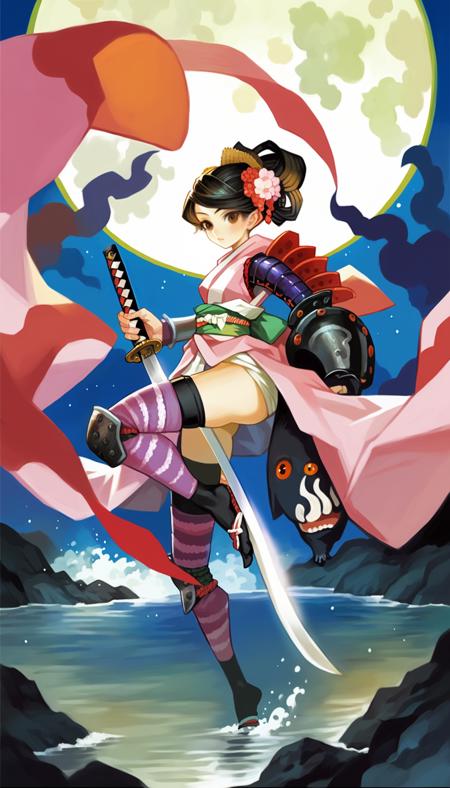

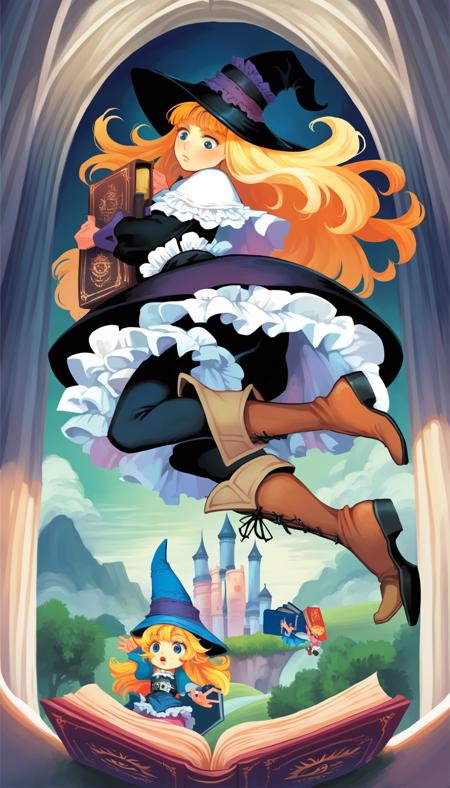

Trigger word: vanillastyle

You can find example prompts in the images above.

Prompts from the previous version of the model mostly work too.

My prompts are generally composed in the order [character traits] + [style] + [expression] + [clothing] + [camera and action] + [background]; you can delete or modify any part as needed.
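As a rough illustration of that ordering, the sketch below joins tag groups in the same fixed sequence and skips any group you leave out. The helper name `build_prompt` and the example tags are hypothetical, and placing the trigger word `vanillastyle` inside the style group is an assumption, not something the order above prescribes.

```python
# Hypothetical helper: joins tag groups in the order used for this model's prompts.
# Group names mirror the order described above; the example tags are illustrative only.
PROMPT_ORDER = [
    "character traits",
    "style",
    "expression",
    "clothing",
    "camera and action",
    "background",
]


def build_prompt(parts: dict) -> str:
    """Concatenate tags group by group; missing or empty groups are simply skipped."""
    tags = []
    for group in PROMPT_ORDER:
        tags.extend(parts.get(group, []))
    return ", ".join(tags)


print(build_prompt({
    "character traits": ["1girl", "long hair"],
    "style": ["vanillastyle"],  # assumption: the trigger word sits in the style group
    "expression": ["smile"],
    "background": ["forest"],
}))
# 1girl, long hair, vanillastyle, smile, forest
```

Deleting or modifying a part then just means dropping or editing one group.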

If an image comes out particularly blurry, consider adding "thumbnail" to the negative prompt and increasing its weight until the image becomes clear.
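One way to try this systematically is to generate a short ladder of negative prompts with "thumbnail" at rising weights and stop at the first one that gives a clear image. This is a minimal sketch assuming a UI that supports the `(tag:weight)` emphasis syntax, such as AUTOMATIC1111's WebUI; the weight range 1.0 to 1.6 and the function names are hypothetical.

```python
def weighted(tag: str, weight: float) -> str:
    """Format a tag with (tag:weight) emphasis syntax; weight 1.0 is left bare."""
    return tag if weight == 1.0 else f"({tag}:{weight:g})"


def thumbnail_ramp(base_negative: str = "", start: float = 1.0,
                   stop: float = 1.6, step: float = 0.2) -> list:
    """Negative prompts with 'thumbnail' at increasing weights, to try in order
    until the output stops looking blurry. The range is an assumed default."""
    n_steps = round((stop - start) / step)
    prompts = []
    for i in range(n_steps + 1):
        w = round(start + i * step, 2)  # round away float noise such as 1.4000000000000001
        parts = [p for p in (base_negative, weighted("thumbnail", w)) if p]
        prompts.append(", ".join(parts))
    return prompts


for neg in thumbnail_ramp():
    print(neg)
# thumbnail
# (thumbnail:1.2)
# (thumbnail:1.4)
# (thumbnail:1.6)
```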

Adding '3d' to the negative prompt may give a better result, while adding tags such as 'realistic' or 'realism' can enhance the character's features.

Recommended weight: 1.0~0.6; adjust as needed until the character's appearance meets your requirements.
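To find the right weight, it can help to render the same prompt at each step of the recommended range and compare. The sketch below assumes the AUTOMATIC1111-style `<lora:name:weight>` prompt syntax and that the LoRA is loaded under its file name `vanillawareStyle`; the 0.1 step size and the function name are hypothetical.

```python
def lora_weight_sweep(prompt: str, lora_name: str = "vanillawareStyle",
                      high: float = 1.0, low: float = 0.6, step: float = 0.1) -> list:
    """One prompt per LoRA weight, from high down to low, for side-by-side comparison.
    Assumes <lora:name:weight> syntax; lora_name is assumed to match the file name."""
    n_steps = round((high - low) / step)
    return [
        f"{prompt}, <lora:{lora_name}:{round(high - i * step, 2):g}>"
        for i in range(n_steps + 1)
    ]


for p in lora_weight_sweep("1girl, vanillastyle"):
    print(p)
# 1girl, vanillastyle, <lora:vanillawareStyle:1>
# 1girl, vanillastyle, <lora:vanillawareStyle:0.9>
# 1girl, vanillastyle, <lora:vanillawareStyle:0.8>
# 1girl, vanillastyle, <lora:vanillawareStyle:0.7>
# 1girl, vanillastyle, <lora:vanillawareStyle:0.6>
```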

Recommended upscale factor: around 1.2~2.0, with a denoising strength of 0.2.
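The upscale factor translates directly into a target resolution. This sketch computes it from a base size and rounds each side down to a multiple of 8, on the assumption that the model is a Stable Diffusion LoRA (whose VAE works at 1/8 resolution). The 512x768 base size and the default factor of 1.5 are hypothetical examples; the 1.2~2.0 range and the 0.2 denoising strength come from the recommendation above.

```python
RECOMMENDED_UPSCALE = (1.2, 2.0)  # from the recommendation above
DENOISING_STRENGTH = 0.2          # from the recommendation above


def hires_fix_settings(width: int, height: int, upscale: float = 1.5,
                       multiple: int = 8) -> dict:
    """Target size for an upscale pass, each side snapped down to a multiple of 8."""
    low, high = RECOMMENDED_UPSCALE
    if not low <= upscale <= high:
        raise ValueError(f"upscale {upscale} is outside the recommended {low}~{high}")

    def snap(side: float) -> int:
        return int(side // multiple) * multiple

    return {
        "width": snap(width * upscale),
        "height": snap(height * upscale),
        "denoising_strength": DENOISING_STRENGTH,
    }


print(hires_fix_settings(512, 768, 1.5))
# {'width': 768, 'height': 1152, 'denoising_strength': 0.2}
```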

The dataset mainly focuses on the works of George Kamitani.

20240907v0.2

In this version, I tagged more images, and for the rest I removed their tags, leaving only the trigger word to prevent conflicts with the carefully written tags. (This method may be wrong.)
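The captioning rule described above can be expressed as a small function: carefully tagged images get the trigger word plus their tags, and every other image gets the trigger word alone. This is a sketch assuming one `.txt` caption file per image with the same base name (the convention used by trainers such as kohya-ss sd-scripts); the function names, the `*.png` pattern, and the `curated` mapping are hypothetical.

```python
from pathlib import Path
from typing import Dict, List, Optional

TRIGGER = "vanillastyle"


def caption_for(tags: Optional[List[str]], trigger: str = TRIGGER) -> str:
    """Curated images keep trigger + their own tags; all others get only the trigger."""
    if not tags:
        return trigger
    return ", ".join([trigger] + [t for t in tags if t != trigger])


def write_captions(image_dir: Path, curated: Dict[str, List[str]],
                   pattern: str = "*.png") -> int:
    """Write a same-named .txt caption next to each image; returns how many were written.
    Assumes the trainer reads captions from .txt files beside the images."""
    count = 0
    for image in sorted(image_dir.glob(pattern)):
        caption = caption_for(curated.get(image.name))
        image.with_suffix(".txt").write_text(caption + "\n", encoding="utf-8")
        count += 1
    return count


print(caption_for(["1girl", "armor"]))  # vanillastyle, 1girl, armor
print(caption_for(None))                # vanillastyle
```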

During training, there were many cases where images in the dataset could not be accurately reproduced from their prompts. I tried changing various tags and retraining, with the same result. These images also have little repetition in the dataset, so they lack continuity.

Finally, I read an article that suggested increasing the number of training repeats for certain characters so that the model learns those images sufficiently.

So I placed all the one-off images in the dataset into a subfolder, set their training repeats to 2, and left the images the model had already learned well unchanged.
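The folder split can be planned with a few lines of code. This sketch assumes the kohya-ss sd-scripts convention of naming image folders `<repeats>_<name>`, and it simplifies "one-off" to "the image's character label occurs only once in the dataset"; both the labelling and the function names are hypothetical. It also counts how many samples one epoch contains, since each image is seen once per repeat.

```python
from collections import Counter
from typing import Dict


def plan_repeat_folders(image_chars: Dict[str, str], name: str = "vanillastyle",
                        boosted_repeats: int = 2) -> Dict[str, str]:
    """Map each image to a '<repeats>_<name>' folder (assumed kohya-style naming).
    Images whose character label appears only once go to the boosted folder."""
    counts = Counter(image_chars.values())
    return {
        image: f"{boosted_repeats if counts[char] == 1 else 1}_{name}"
        for image, char in image_chars.items()
    }


def samples_per_epoch(plan: Dict[str, str]) -> int:
    """Each image is seen once per repeat, so one epoch = sum of all repeat counts."""
    return sum(int(folder.split("_", 1)[0]) for folder in plan.values())


plan = plan_repeat_folders({"a.png": "alice", "b.png": "alice", "c.png": "bob"})
print(plan)
# {'a.png': '1_vanillastyle', 'b.png': '1_vanillastyle', 'c.png': '2_vanillastyle'}
print(samples_per_epoch(plan))
# 4
```

Doubling the repeats of one-off images also doubles their share of each epoch, which is why any quality problems in those images weigh more heavily on the result.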

However, many of these one-off images have quality issues, and since I have not fixed them yet, increasing their repeats has affected the overall style to some extent.

For the next version, the most fundamental improvement is to raise the quality of the dataset. Captioning can also help: add the same tag to all of the slightly lower-quality images, then put that tag in the negative prompt when using the model.
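That plan has two halves: tag the weaker images at training time, then exclude the tag at generation time. The sketch below shows both under the assumption that captions are comma-separated tag strings; the tag name `vw_lowquality` and the function names are hypothetical placeholders, not tags used by this model.

```python
from typing import Dict, Set

QUALITY_TAG = "vw_lowquality"  # hypothetical shared tag for lower-quality images


def tag_low_quality(captions: Dict[str, str], low_quality: Set[str],
                    tag: str = QUALITY_TAG) -> Dict[str, str]:
    """Append the shared tag to the captions of flagged images (safe to run twice)."""
    out = {}
    for image, caption in captions.items():
        if image in low_quality and tag not in caption.split(", "):
            caption = f"{caption}, {tag}"
        out[image] = caption
    return out


def negative_prompt(base: str = "", tag: str = QUALITY_TAG) -> str:
    """At generation time, put the same tag in the negative prompt."""
    return ", ".join(p for p in (base, tag) if p)


captions = {"a.png": "vanillastyle, 1girl", "b.png": "vanillastyle"}
print(tag_low_quality(captions, {"b.png"}))
# {'a.png': 'vanillastyle, 1girl', 'b.png': 'vanillastyle, vw_lowquality'}
print(negative_prompt("3d"))
# 3d, vw_lowquality
```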

20240715v0.1

This model can only be considered v0.1. It is not very easy to use, and I think tagging more of the dataset in detail would give better results. In the future, I may gradually finish training this model.

This version does not perform very well; the images it generates can often look chaotic.

I collected 100+ images as a dataset, but that is still too many to tag manually. I initially used wd1.4 to tag all the images, but the tagging quality was poor (maybe I was not using it correctly; suggestions are welcome).

Because I wanted to see results quickly, I only manually tagged some of the images in this dataset that match my personal preferences, so the model's output will be better for those images.

Description:

Trained words:

Name: vanillawareStyle.safetensors

Size (KB): 223106

Type: Model

Pickle scan result: Success

Pickle scan info: No Pickle imports

Virus scan result: Success