Before you use it

-

You need to know how Stable Diffusion works. I recommend using ComfyUI or Automatic1111 as the interface for running the model. Civitai doesn’t read ComfyUI metadata, so feel free to experiment, and keep in mind that ComfyUI handles settings differently from Automatic1111 (CFG, steps, and configuration). I no longer use Automatic1111, so the examples here are ComfyUI-based; I tried to translate their metadata in a simple way.

-

This is a block-merge model based on XL models. It took a lot of testing to arrive at the generations I wanted. Keep in mind that the SD1.5 system is very different from SDXL; you can use both versions, but account for those differences.

-

This is a checkpoint model, not a LoRA.

-

Because it is a merge, it can sometimes generate NSFW images easily. Add "(nudes:0.1)" or just "nudes" to the negative prompt, but this will not always help; it is a side effect of how some of the merged models were trained.

-

I recommend following me on Instagram, where I explain AI image generation: https://www.instagram.com/eddiemauro.design/

Intro

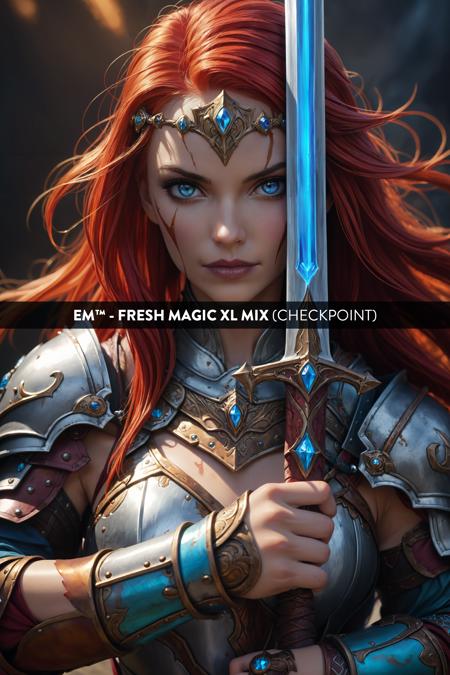

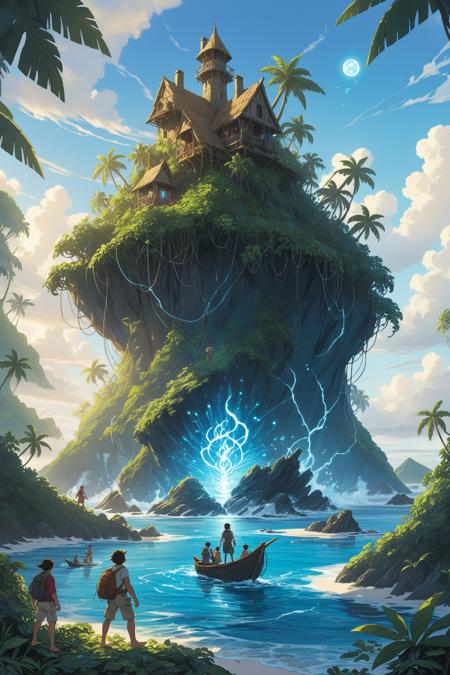

FRESH MAGIC (digital and 3d-eddiemauro mix) CHECKPOINT: Hi, I’m a product and car designer, and I’m very excited to experiment with AI; I think it is a good tool for design. This is a merged model I created to make my own work easier, and it also has an SD1.5 version.

v1: A good model for fantasy, sci-fi, and digital illustration. It works well with a “real” or “semi-real” (not ultra-realistic) 3D-render look, and also with a flat graphic style (though it was not created for that; for flat styles, use the FreshDraw variant). The idea was to create a "general model" for digital illustration focused on a 3D look and “realistic” art representation. It was created by block-merging several models from Civitai.

v1 Lightning: Same as v1, but I consider the normal model better in quality. This model generates faster and tends to add a lot of detail. It works only with some samplers, such as DPM++ SDE with the Simple scheduler or Heun with the Simple scheduler. Use 4 to 6 steps and a CFG of 1 to 2.
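The Lightning and standard settings are narrow enough to check mechanically before queuing a generation. Below is a minimal Python sketch, assuming ComfyUI's internal sampler/scheduler identifiers (`dpmpp_sde`, `heun`, `dpmpp_sde_gpu`, `dpmpp_2m_sde`, `simple`); `Preset` and `check` are hypothetical helper names, not part of ComfyUI. The Lightning pairs include the two extra samplers listed under the sampler recommendations.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Preset:
    steps: tuple[int, int]                 # inclusive (min, max) step count
    cfg: tuple[float, float]               # inclusive (min, max) CFG scale
    samplers: frozenset[tuple[str, str]]   # allowed (sampler, scheduler) pairs; empty = any


# Ranges taken from this model card; identifiers are assumed ComfyUI names.
STANDARD = Preset((20, 40), (4.0, 6.0), frozenset())
LIGHTNING = Preset(
    (4, 6),
    (1.0, 2.0),
    frozenset({
        ("dpmpp_sde", "simple"),
        ("heun", "simple"),
        ("dpmpp_sde_gpu", "simple"),
        ("dpmpp_2m_sde", "simple"),
    }),
)


def check(preset, steps, cfg, sampler, scheduler):
    """Return a list of problems; an empty list means the settings match the preset."""
    problems = []
    lo, hi = preset.steps
    if not lo <= steps <= hi:
        problems.append(f"steps {steps} outside {lo}-{hi}")
    lo_c, hi_c = preset.cfg
    if not lo_c <= cfg <= hi_c:
        problems.append(f"cfg {cfg} outside {lo_c}-{hi_c}")
    if preset.samplers and (sampler, scheduler) not in preset.samplers:
        problems.append(f"{sampler}/{scheduler} not recommended for this preset")
    return problems


print(check(LIGHTNING, 5, 1.5, "dpmpp_sde", "simple"))  # → []
```

Keeping the presets as data rather than scattered `if` statements makes it easy to add another variant later without touching `check`.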

You can compare the differences between Magic and Draw models:

https://miro.com/app/board/uXjVKvtvAYY=/?share_link_id=995366256638

If you want to support my work and help me upload more models (with better quality), you can donate here; I would greatly appreciate it: https://ko-fi.com/eddiemauro

Installation

-

I use ComfyUI, in my opinion the best UI for Stable Diffusion image generation, so I recommend installing it locally or running it online through Colab or another hosting service. You can find instructions and videos online.

-

You need to install the checkpoint model to use it. Put the file inside the “checkpoints” folder (in ComfyUI, this is models/checkpoints).

-

For image creation, please follow a few small recommendations, but don’t hesitate to experiment; the XL system is more precise and interesting.
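Installing the checkpoint is just a file copy. Below is a minimal Python sketch, assuming a standard local ComfyUI layout (`<ComfyUI root>/models/checkpoints`); `install_checkpoint` is a hypothetical helper, and a hosted or customized install may keep models elsewhere.

```python
import shutil
from pathlib import Path


def install_checkpoint(model_file, comfyui_root):
    """Copy a .safetensors checkpoint into <comfyui_root>/models/checkpoints.

    Assumes the default ComfyUI folder layout; adjust the path for other setups.
    Returns the path of the installed file.
    """
    src = Path(model_file)
    if src.suffix != ".safetensors":
        raise ValueError(f"expected a .safetensors file, got {src.name}")
    dest_dir = Path(comfyui_root) / "models" / "checkpoints"
    dest_dir.mkdir(parents=True, exist_ok=True)  # create the folder if it is missing
    dest = dest_dir / src.name
    shutil.copy2(src, dest)  # copy2 also preserves file timestamps
    return dest
```

For example, `install_checkpoint("freshMagicXLDigital3D_v10.safetensors", "ComfyUI")` would place the file where ComfyUI's checkpoint loader lists it after a refresh.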

Recommendations for image generation

-

Activation token/caption: You can write whatever you want in the prompt. I use just a description of the image I want, plus “masterpiece” in the positive prompt. For a more realistic representation, add “realistic” to the positive prompt; for another style such as illustration, anime, or cartoon, add those words instead.

-

I recommend using “Wildcards” for prompt experimentation. Install any ComfyUI node that supports wildcards; this adds more variety to your creations.

-

Textual inversion/embedding or LoRA: You can use any LoRA or embedding made for the XL system, in the positive or negative prompt. For the negative prompt, I use: worst quality, text, artifacts, strabismus. If you want more realism, put the opposite style word (for example, “cartoon”) in the negative prompt.

-

VAE: This model has the VAE baked in, as most models do. If you want to load a VAE separately, it works with the standard XL VAE.

-

Clip Skip: Use 2 or 1. With 2, images are sometimes better defined or have more contrast, but 1 works well too (it produces a more realistic look).

-

Steps and CFG: I recommend 20-40 steps and a CFG scale of 4-6.

-

Sampler: I mostly use “Euler a” with the Normal scheduler or “DPM++ SDE” with the Karras scheduler. Euler tends to be simpler and more creative, while DPM adds more detail, although in SDXL the difference is often small. Feel free to experiment with other samplers. For Lightning, you must use DPM++ SDE with the Simple scheduler or Heun with the Simple scheduler, with 4 to 6 steps and a CFG of 1 to 2; DPM++ SDE GPU with Simple and DPM++ 2M SDE with Simple also work.

-

Batch: In txt2img, try a value of 4 to generate several images and compare them. If you have a good graphics card, use “Batch size”, which creates 4 images at the same time (faster overall, but it needs more VRAM). If your computer cannot handle that, use “Batch count” instead, which creates 4 images one after another, so total generation time is longer.

-

Image aspect: Try 1024x1024, or sizes and aspect ratios based on 768 or 1024 on one or both sides. For example, I use 768x1152. Then, for upscaling, use a factor of 1.5.

-

Create bigger images: There are 4 different methods to create large images in Stable Diffusion; you can look up each one online. For the first method, “txt2img hires.fix”, I recommend the upscale model “4x-UltraSharp”: download only its “.pth” file and install it by putting it inside the “ESRGAN” folder. In the hires.fix options, set any “upscale by” value with a “denoise strength” of “0.4-0.7”. For the second method, send the image generated in txt2img to img2img mode and increase its dimensions by at least “1.5 times”, with a “denoise strength” of “0.4-0.7”. For the third method, use “tiled generation”: the same configuration as img2img, but activating the “tile” mode of the “ControlNet” extension or the “Ultimate SD Upscale” script. For the last method, send the image generated in txt2img to “extras”, then select a GAN model and scale the image; you can also use the “4x-UltraSharp” model there.

-

Get more control over your creations: Use the “ControlNet” extension to get a more controlled shape of what you want; you can even test it with sketches using the “Scribble” or “Lineart” modes. Install this extension and then learn how to use it; there are plenty of videos online about it.
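The image-size advice above (768 or 1024 based sizes such as 768x1152, then a 1.5x upscale) is simple arithmetic. Below is a minimal Python sketch; the helper names are hypothetical, and rounding to a multiple of 8 is an assumption based on the Stable Diffusion VAE working on latents downsampled by a factor of 8.

```python
from math import gcd


def aspect_ratio(width, height):
    """Reduce a resolution to its simplest ratio, e.g. 768x1152 -> (2, 3)."""
    g = gcd(width, height)
    return width // g, height // g


def upscaled_size(width, height, factor=1.5, multiple=8):
    """Scale both sides by `factor`, snapping each to the nearest `multiple`."""
    def snap(side):
        return max(multiple, round(side * factor / multiple) * multiple)
    return snap(width), snap(height)


print(aspect_ratio(768, 1152))   # → (2, 3)
print(upscaled_size(768, 1152))  # → (1152, 1728)
```

So a 768x1152 base image becomes 1152x1728 after a 1.5x upscale, whether you do it with hires.fix or img2img.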

Example Prompting:

Positive prompt:

Digital illustration of a warrior woman, big castle in the background, cinematic, masterpiece, colorful

Negative prompt:

worst quality, text, artifacts, strabismus

Steps: 20-40

CFG scale: 4-6
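The example above can also be expressed as a ComfyUI workflow in its API (JSON) format. Below is a minimal Python sketch that only builds the dictionary; it assumes the standard ComfyUI node names (`CheckpointLoaderSimple`, `CLIPSetLastLayer`, `CLIPTextEncode`, `EmptyLatentImage`, `KSampler`, `VAEDecode`, `SaveImage`) and the convention that Clip Skip N maps to `stop_at_clip_layer = -N`. Verify it against a workflow exported from your own ComfyUI with “Save (API Format)” before relying on it. `build_workflow` and the `freshmagic` filename prefix are hypothetical.

```python
import json
import random


def build_workflow(positive, negative,
                   ckpt_name="freshMagicXLDigital3D_v10.safetensors",
                   steps=30, cfg=5.0, sampler="euler_ancestral", scheduler="normal",
                   clip_skip=2, width=768, height=1152, batch_size=4, seed=None):
    """Return an API-format workflow: node id -> {"class_type", "inputs"}.

    A link is written as [source_node_id, output_index].
    Defaults follow this card: 20-40 steps, CFG 4-6, Euler a / Normal,
    Clip Skip 2, 768x1152, batch of 4.
    """
    if seed is None:
        seed = random.randint(0, 2**32 - 1)
    return {
        # Outputs of the loader: 0 = MODEL, 1 = CLIP, 2 = VAE.
        "1": {"class_type": "CheckpointLoaderSimple",
              "inputs": {"ckpt_name": ckpt_name}},
        # Clip Skip 2 -> -2; Clip Skip 1 -> -1 (no layers skipped).
        "2": {"class_type": "CLIPSetLastLayer",
              "inputs": {"clip": ["1", 1], "stop_at_clip_layer": -clip_skip}},
        "3": {"class_type": "CLIPTextEncode",
              "inputs": {"text": positive, "clip": ["2", 0]}},
        "4": {"class_type": "CLIPTextEncode",
              "inputs": {"text": negative, "clip": ["2", 0]}},
        "5": {"class_type": "EmptyLatentImage",
              "inputs": {"width": width, "height": height, "batch_size": batch_size}},
        "6": {"class_type": "KSampler",
              "inputs": {"model": ["1", 0], "positive": ["3", 0], "negative": ["4", 0],
                         "latent_image": ["5", 0], "seed": seed, "steps": steps,
                         "cfg": cfg, "sampler_name": sampler, "scheduler": scheduler,
                         "denoise": 1.0}},
        "7": {"class_type": "VAEDecode",
              "inputs": {"samples": ["6", 0], "vae": ["1", 2]}},
        "8": {"class_type": "SaveImage",
              "inputs": {"images": ["7", 0], "filename_prefix": "freshmagic"}},
    }


workflow = build_workflow(
    "Digital illustration of a warrior woman, big castle in the background, "
    "cinematic, masterpiece, colorful",
    "worst quality, text, artifacts, strabismus",
    seed=42,
)
print(json.dumps(workflow, indent=2))
```

A local ComfyUI server accepts a dictionary like this when it is posted as `{"prompt": workflow}` to its `/prompt` endpoint; for Lightning, pass for example `steps=5, cfg=1.5, sampler="dpmpp_sde", scheduler="simple"`.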

What’s coming in the future

I’m already working on improving the model with better merges. If you’d like a better version of this model, please keep supporting me on Ko-fi; the more people support me, the more time I can invest in training and improving models, but if that doesn’t happen, I can’t.

I launched my first private model for my Ko-fi membership lv.1, called "eddiemauro scene", a minimalistic scenery model for rendering. If you want access to private models, you can support me by subscribing to this membership. I will also start uploading more models here focused on product and car design.

License

See the Stable Diffusion license here. For this specific model, you may use it for whatever you want in terms of image generation. It is prohibited to:

-

Upload this model to any server or public website without my permission.

-

Share this model online without my permission, use my exact model under a different name, or upload this model and then run it on services that generate images for money.

-

Merge it with a checkpoint or a LoRA and then publish or share it online; just talk to me first. In the future,

-

Sell this model or merges that use this model.

Supporting

You can follow me on my social networks, where I show my process as well as design tips and tools. You can also check my webpage, and if you need a design service, I work as a freelancer.

https://www.facebook.com/eddiemauro.design

https://www.instagram.com/eddiemauro.design

https://www.linkedin.com/in/eddiemauro

https://www.behance.net/eadesign1

Description:

Trigger words:

Name: freshMagicXLDigital3D_v10.safetensors

Size (KB): 6973453

Type: Model

Pickle scan result: Success

Pickle scan info: No Pickle imports

Virus scan result: Success