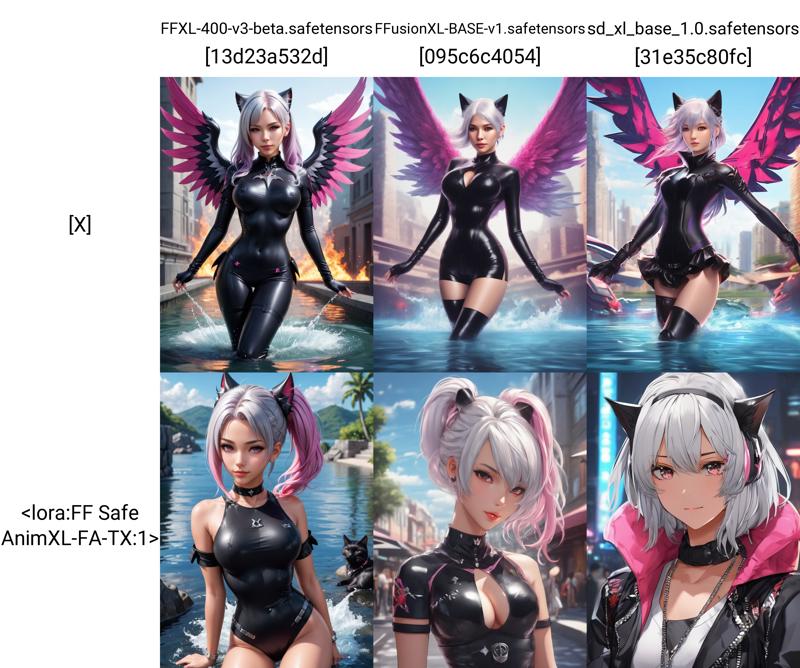

Trained specifically for style mixing on a Safe For Work (SFW) dataset.

Text Encoder (strongly reliant on U-Net architecture):

Lora Model:

- Trained using the CivitAI trainer.
- Trained for 16 epochs.
- Performance: the Lora gives stable results in Stable Diffusion XL (SDXL) settings.

Lycoris Models:

- Configured using a preset from LyCORIS, with our custom tweaks.
- Trained on 8x A6000 GPUs.
- Trained for 20-40 epochs with a batch size of 20.
- Module Type Breakdown:
  - LohaModule: 176
  - LoConModule: 150
  - FullModule: 26
  - LokrModule: 700

Comparison:

- CivitAI's Lora stands out in our tests, but we've yet to explore its full potential in style mixing with other models.

Feedback, collaboration, and tests are welcome!

Description:

Mixed training experiment using lokr, lora, and loha.

More balanced dims (lower size).

module type table: {'LohaModule': 176, 'LoConModule': 150, 'FullModule': 26, 'LokrModule': 700}
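To put the counts in the module type table in proportion, they can be tallied in a few lines of Python. This is only an illustrative sketch: the dictionary is copied from the table above, and the variable names are ours, not part of any training tool.

```python
# Module counts copied from the module type table above.
module_counts = {
    "LohaModule": 176,
    "LoConModule": 150,
    "FullModule": 26,
    "LokrModule": 700,
}

total = sum(module_counts.values())

# Share of each module type, as a percentage of all wrapped modules.
shares = {name: 100 * count / total for name, count in module_counts.items()}

# Print the types from most to least common, then the overall total.
for name, count in sorted(module_counts.items(), key=lambda kv: -kv[1]):
    print(f"{name:<12} {count:>4}  {shares[name]:5.1f}%")
print(f"{'total':<12} {total:>4}")
```

By this tally, LokrModule accounts for roughly two thirds of the 1052 wrapped modules.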

unet_target_module = [
  "Transformer2DModel",
  "ResnetBlock2D",
  "Downsample2D",
  "Upsample2D",
]
unet_target_name = [
  "conv_in",
  "conv_out",
  "time_embedding.linear_1",
  "time_embedding.linear_2",
]
text_encoder_target_module = [
  "CLIPAttention",
  "CLIPMLP",
]

[module_algo_map]

[module_algo_map.CrossAttention] # Attention Layer in UNet
algo = "lokr"

[module_algo_map.FeedForward] # MLP Layer in UNet
algo = "lokr"

[module_algo_map.ResnetBlock2D] # ResBlock in UNet
algo = "lora"

[module_algo_map.CLIPAttention] # Attention Layer in TE
algo = "loha"

[module_algo_map.CLIPMLP] # MLP Layer in TE
algo = "lora"

Trigger words:

Name: FF-Safe-Anim-v3-LycorisFF.safetensors

Size (KB): 401002

Type: Model

Pickle scan result: Success

Pickle scan message: No Pickle imports

Virus scan result: Success